Morgan Elder's

WolverineSoft Game Devlog

Project Light (Subtension) - Fall 2022

Most of my time these first few weeks has been spent updating the project’s audio-related documents and helping new audio team members get acquainted with the project and the process of audio implementation.

Documentation and Organization

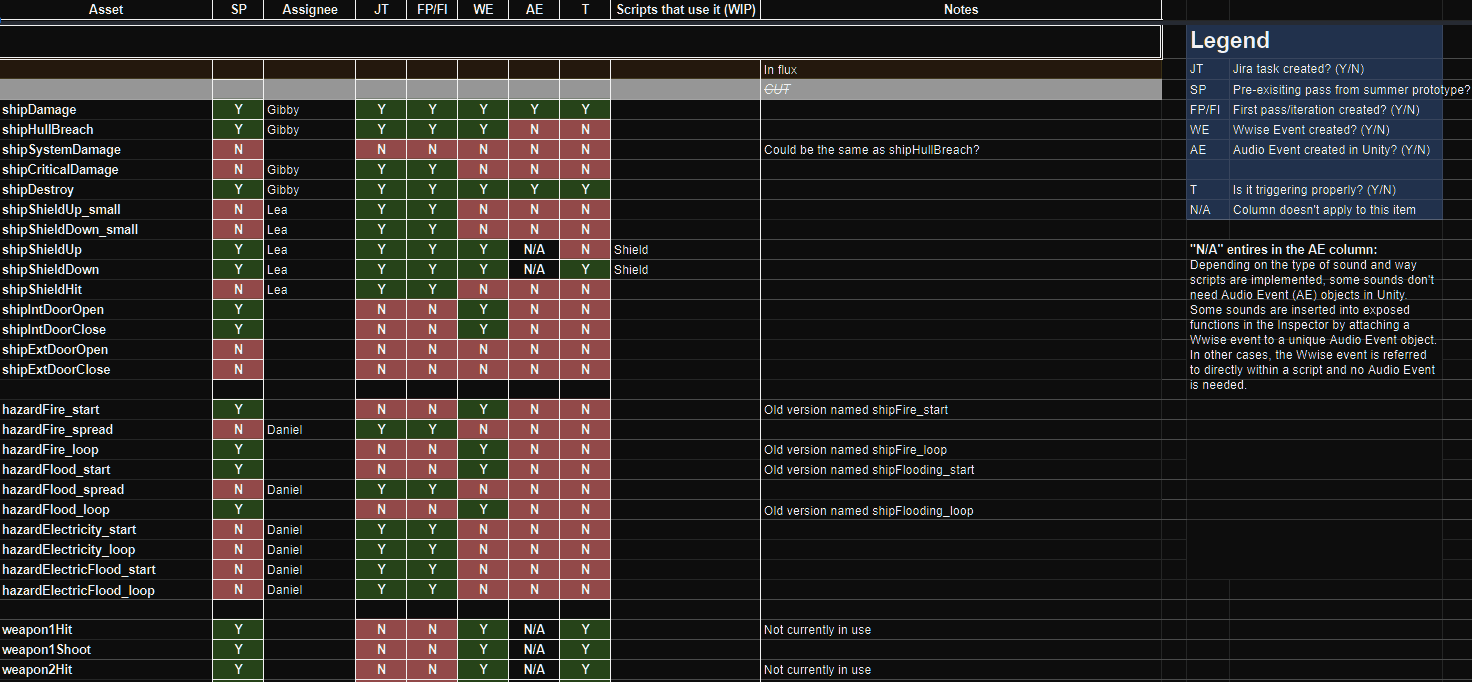

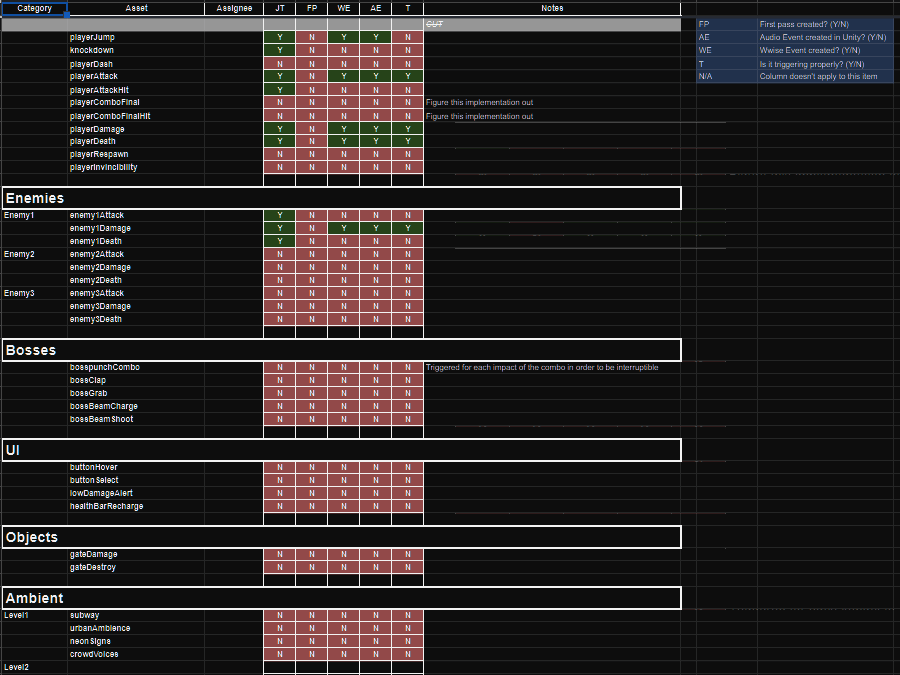

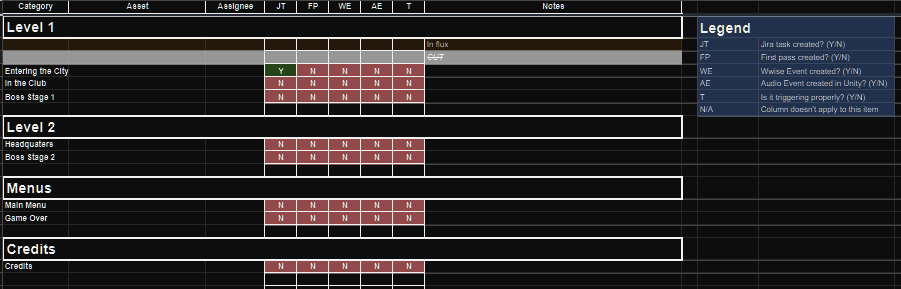

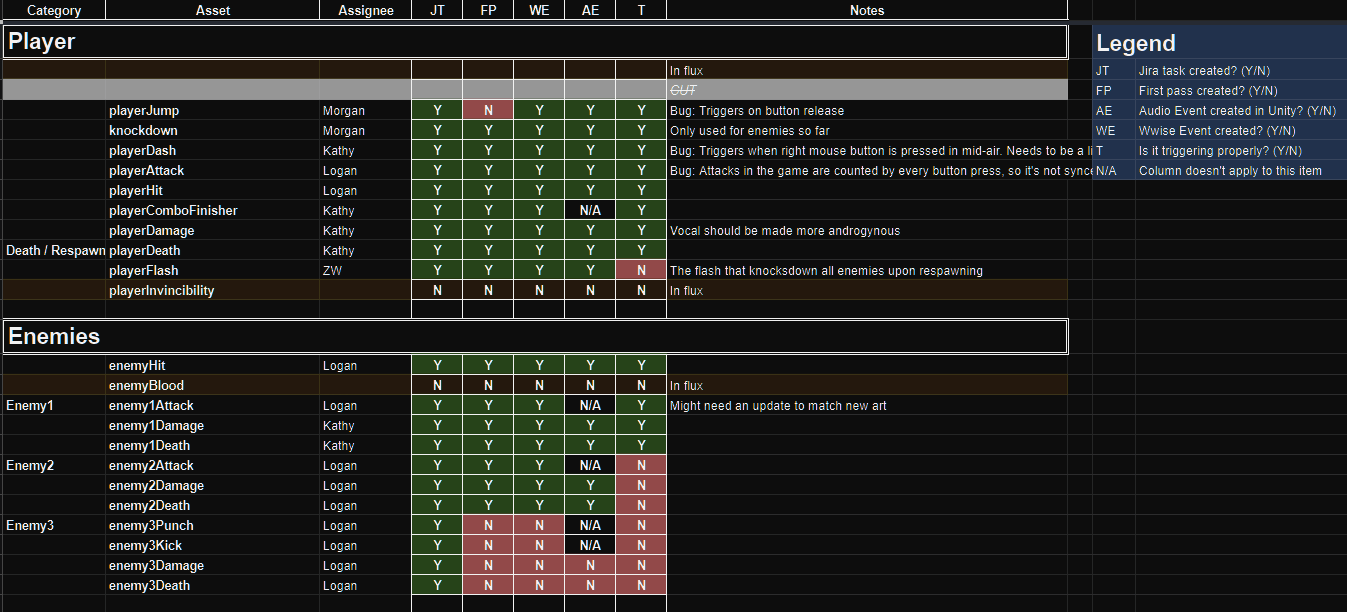

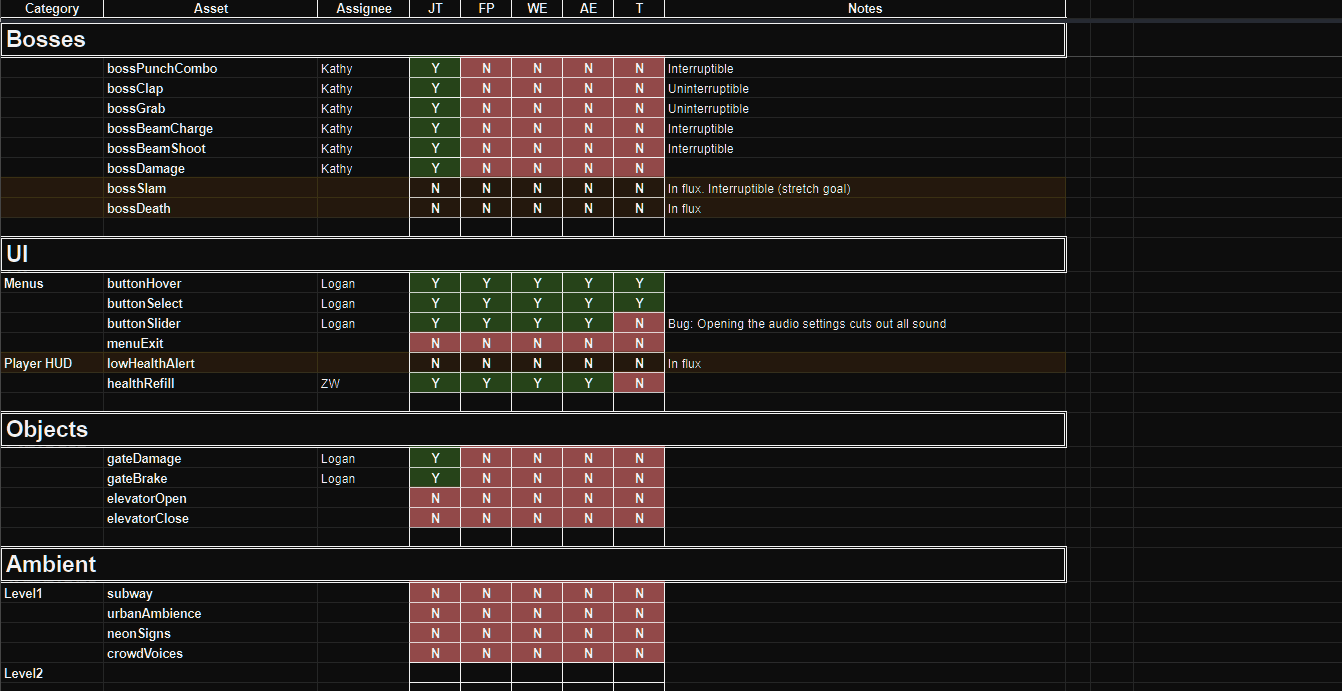

I created a new audio asset list with new columns that track which sounds have a first pass from the game’s summer prototype and which C# scripts trigger each sound effect. Since I want to do a better job of teaching others how to implement audio and of sharing those tasks across the team, tracking where the scripts activate each sound will make it much easier for us to test our more complex implementation methods.

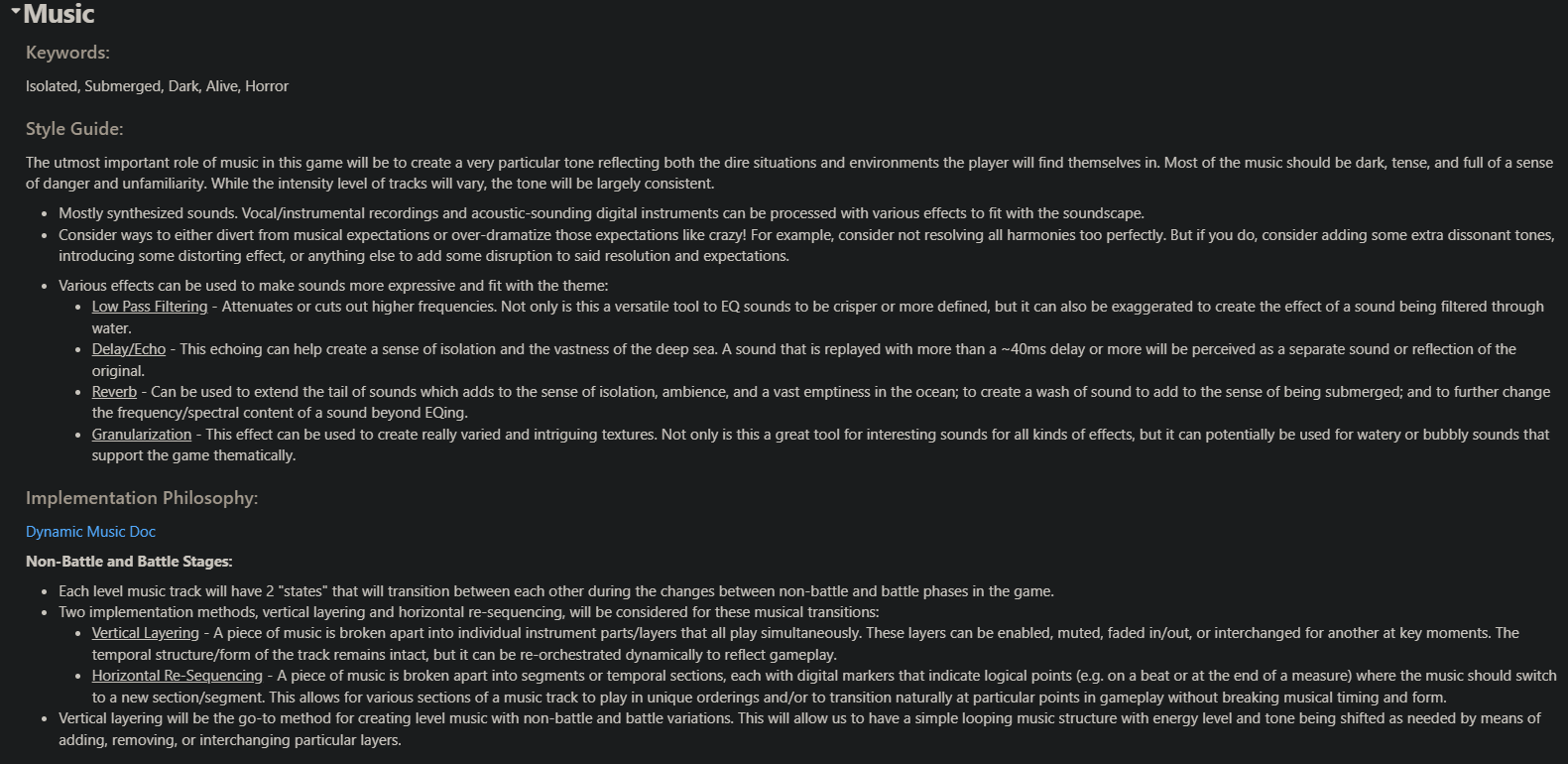

I also added more stylistic details to our audio design document and added to the team’s shared YouTube playlist of music and SFX references. I’ll continually add to this as we define the game’s tone and start making music in the next sprint.

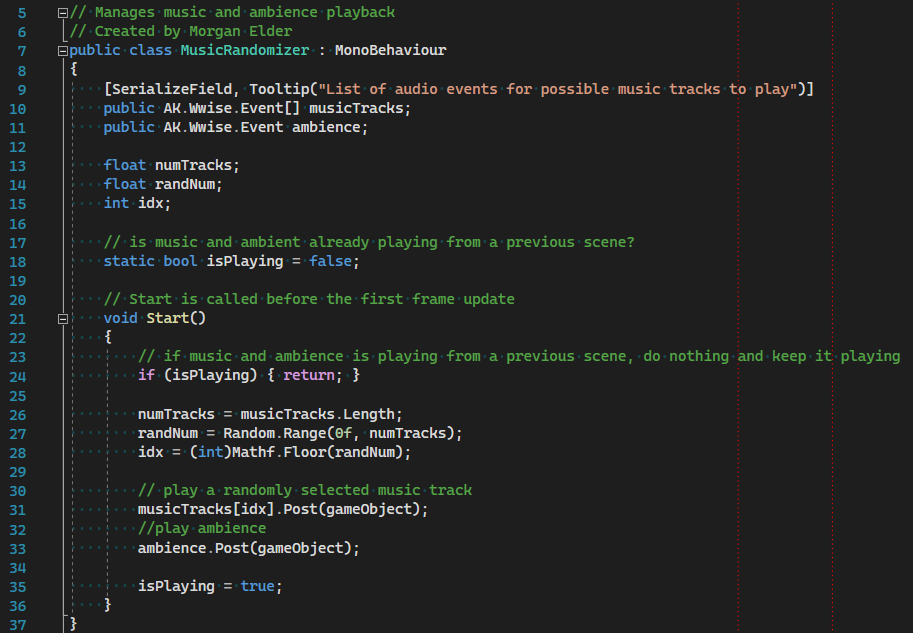

I’ve begun reorganizing the Wwise project to improve folder structure, file naming conventions, and audio signal flow. To make it easier than in previous project cycles for everyone to share and access all of the game’s audio assets, I’ve organized an audio asset folder that will stay on the shared repository for easy version control and management.

Creating SFX

Lastly, I created some new UI SFXs for the game which are a bit darker and lower than what we’ve had previously. I’m excited to test them out next week and hear how they fit into the game!

New UI SFXs

Hours Breakdown

- Creating SFX: 3 hours

- Project Organization and Documentation: 3 hours

- Task Creation: 1.5 hours

- Meetings: 7 hours

- Total: 14.5 hours

At the studio’s in-person work session, I got to work with others on the audio team mostly by helping them with more complex implementation tasks and navigating through the game’s scripts. This production cycle is the first where I’ve involved others into implementation tasks to this level and, while these tasks can become complex and take a lot of time to figure out, everyone is getting more familiar with the methods involved and with the organization of the project, so I’m proud of our progress!

It was nice to be able to meet with the entire studio and audio team in person at our studio-wide meeting a week ago since it allowed us to play through the current state of the game and discuss next steps. At this point, even though we figured out several new sound effects to consider and start working on, the programming team hadn’t implemented the corresponding features yet and it would be a while until we had the corresponding art assets. Because of this, we decided to start working on concept music slightly earlier than I originally expected.

This led to some really valuable conversations about a new direction for music. Previously in the summer, my plan for music was that each level theme would be split into low and high intensity versions for the building and battle phases of the game respectively. However, after working on my Sonic Palette and listening to others’ lists of reference audio for that assignment, I started to consider new possibilities, especially for more ambient styles of music. Everyone else on the team provided their own thoughts and we started to consider more ambient (yet tense) styles of music for our battle phases and contrasting this with more rhythmic and ‘moving’ styles for the build phases. I have yet to update the audio design document since we’re still fleshing out these details, but it’s exciting to have a new plan that allows us to create a wider variety of music that better supports the game’s horror theme.

With several new sound effects implemented and having the rest of the team implement their own work, I’ve spent some extra time reorganizing the Wwise project to be easier to navigate for everyone and to allow it to grow for the future as we add more sounds.

Hours Breakdown

- Meetings: 6 hours

- Project Organization and Documentation: 2 hours

- Audio Implementation: 3 hours

- Task Creation: 1 hour

- Total: 12 hours

My work this week involved implementation and audio mixing in Wwise. I worked with programmers at the studio-wide work session to update how UI sounds are triggered for the new drag-and-drop system for purchasing and placing items from the shop. Playtest feedback told us that the UI sounds play too often and are too bassy to be heard so frequently, so I need to do a lot of tweaking by iterating on the sounds themselves and being more selective about which UI elements have sounds. I’ll be making new versions of the sounds next week so, for now, I’ve added a high-pass filter to some of these sound effects to cut out the bass and removed UI sounds from several buttons. I also made efficiency improvements to some audio scripts that I had worked on in the previous sprint.

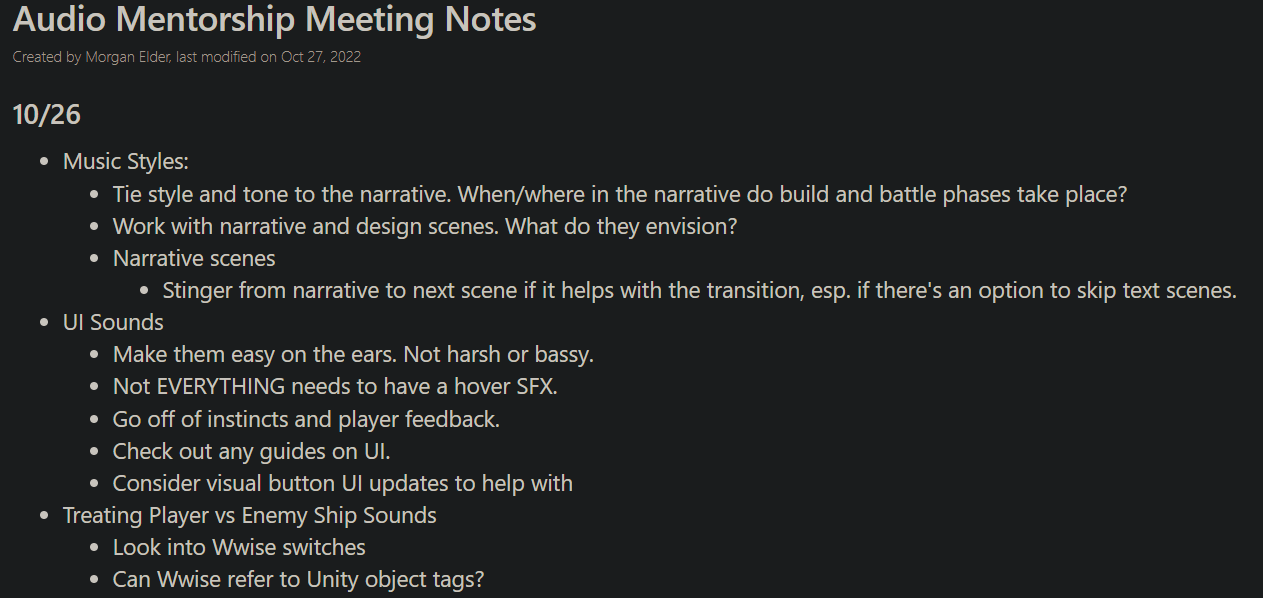

The audio team has started having weekly mentorship meetings with Crystal Lee, who works as an audio designer at Schell Games and whom I used to work with in WolverineSoft’s audio department. The conversations have focused on solutions to various audio design problems we’ve been tackling, like adaptive music, runtime audio effects, and audio spatialization. She’s been a great resource for feedback and ideas on both stylistic decisions and technical solutions!

I coordinated with the narrative team over the last few weeks and we now have a nearly completed script for the narrative scenes! I’ve gone ahead and booked two sessions in Audio Studio A at the Duderstadt Center so that we can have the best resources possible for the dialogue, which is voiced by multiple characters. That studio space has a large tracking room separate from the mixing board, several isolation booths, personal headphone mixers, and an array of amazing microphones. I’m really excited to get other members of the audio team involved in both audio recording and voice acting direction! Very soon into the next sprint, we will be selecting members from across the entire studio to be our voice actors.

At the end of this sprint, it has finally hit me how we’re nearing the end of the project quickly as well as how much work the audio team has gotten done over the past few months. We’re going to keep going strong into our last production sprint and we’re on schedule to get all of our first-iteration sound effects and music done by the end of the following sprint!

Hours Breakdown

- Studio-Wide Meetings: 4 hours

- Mentorship Meetings: 1.5 hours

- Audio Implementation: 3 hours

- Studio-Wide Work Session (Implementation & Audio Design Discussions): 4 hours

- Task Creation & Planning: 1.5 hours

- Total: 14 hours

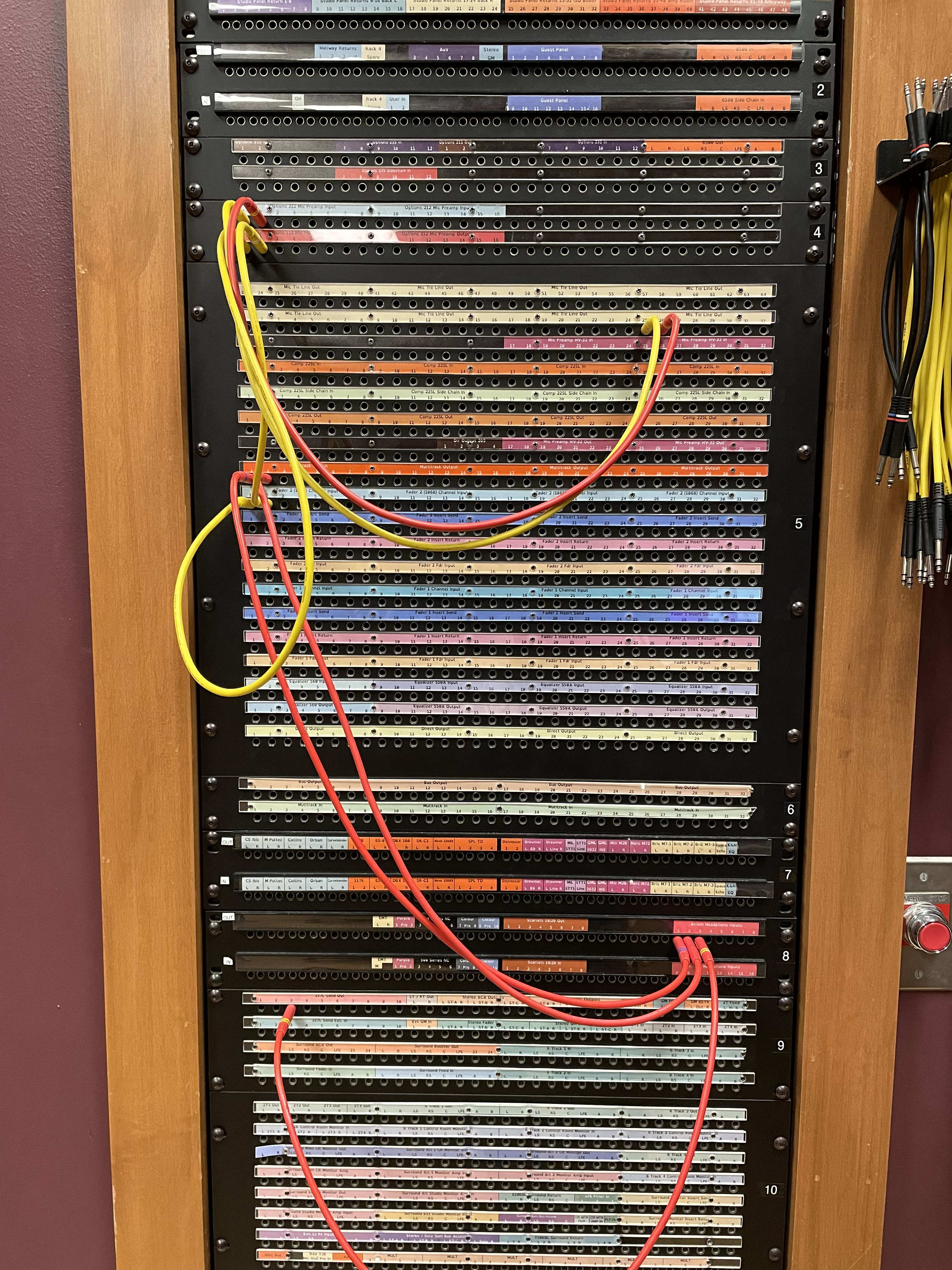

Just before the Thanksgiving break, the whole audio team was able to come into the audio studio at the Duderstadt Center for our second and last recording session for voice acting.

I arrived at the studio earlier than everyone else in order to set up microphones and personal mixers and to route all of the audio into the control room. While I ran into a few setup difficulties in the first session after not having used the studio in some time, in this last session I was able to go through all the motions and refer to my previous patchbay routing scheme super quickly!

The rest of the team arrived at the studio in time for us to attend the remote studio-wide meeting and we had our department meeting later in the day between recording our voice actors.

We got to record 4 actors, all volunteers from within WolverineSoft Studio. We recorded one actor at a time, but had a second mic monitored for Lea as she ran through lines with the actors. We considered a few different mics and ended up using the Electro-Voice RE20. Lea knew this model from its use in older radio broadcasts. We selected it because it sounded slightly less bright and modern than other mics commonly used for podcasts, which makes sense as these voices are going through the comms of a submarine. The voices and sound quality all turned out really well!

Hours Breakdown

- Mentorship Meeting: 1 hour

- Audio Implementation: 2 hours

- Voice Over Recording Session: 5 hours

- Task Creation & Planning: 1 hour

- Total: 9 hours

The audio team had a very busy and productive final stretch to the end! We got voice over fully implemented, varied the ambiences throughout the game, improved both scripted and dynamic music transitions, and did a lot of polishing, mixing, and tweaking of implementation.

Editing the Recorded Dialogue

My first major task of the last 2 weeks was to cut up half the dialogue into discrete lines and takes (Lea took care of the second half). I then took all our cut dialogue and applied various effects in a batch process, treating the audio to be clearer and more consistent in volume. More specifically, it was a process of low-pass filters, noise reduction, loudness normalization, compression, noise gating, and amplification. Once I had settled on all of the effects and their settings, the batch processing allowed me to efficiently treat the 70+ takes that we ultimately selected for final use in the game.
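To illustrate why a fixed chain suits batch processing, here is a minimal Python sketch. It is not the tool or settings used on the project: it covers only two simplified stages (a per-sample gate and RMS-based level matching), and the function names, threshold, and target level are all assumptions chosen for the example.

```python
import math

def rms(samples):
    """Root-mean-square level of a list of float samples in [-1.0, 1.0]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def noise_gate(samples, threshold=0.02):
    """Crude gate: silence any sample whose magnitude is below the threshold.
    (Real gates follow a smoothed envelope; this is a simplification.)"""
    return [s if abs(s) >= threshold else 0.0 for s in samples]

def normalize_rms(samples, target_rms=0.1):
    """Scale a take so its RMS matches target_rms, clipping to [-1, 1]."""
    current = rms(samples)
    if current == 0.0:
        return list(samples)
    gain = target_rms / current
    return [max(-1.0, min(1.0, s * gain)) for s in samples]

def process_take(samples):
    """Apply the same fixed chain to one take."""
    return normalize_rms(noise_gate(samples))

def process_batch(takes):
    """Run every take (name -> samples) through the identical chain."""
    return {name: process_take(samples) for name, samples in takes.items()}
```

Because every take passes through the same function with the same settings, a quiet take and a loud take come out at a matching level without any per-file adjustment.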

Music and Ambience Transitions

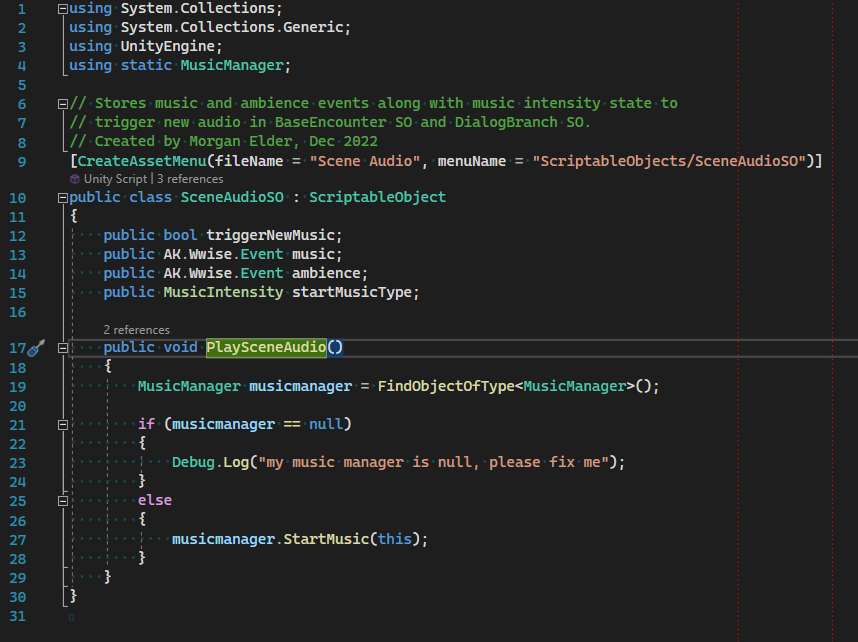

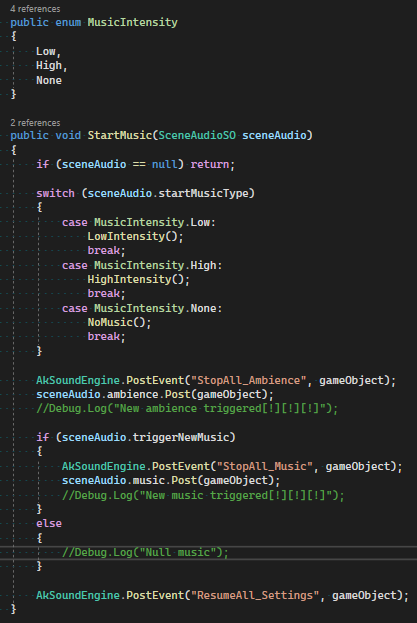

The most challenging (and time-consuming) final task I had was to improve music transitions. Certain music and ambience Wwise events were selected to be triggered at certain points in the game (e.g. specific moments in dialogue, upon switching to a build or battle phase in gameplay). Previously, audio events were added as member variables of a specific type of Encounter Scriptable Object (SO), which was a little limiting since some of the key audio transitions we wanted occurred somewhere in between these overarching encounters.

Since there were just a few Unity scenes, and unique features of story and gameplay progression were handled by a series of various SO classes, I created my own SO, named a Scene Audio SO, that allowed me to insert audio events with flexibility. This Scene Audio SO contained audio events and other variables that would be passed on to my main Music Manager script, and this SO was added as a member variable to all of the various other SOs that managed dialogue branches and level progression. I could now put new music and/or audio wherever I wanted very accurately!
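The idea of attaching a small audio-data object to each progression object can be sketched in a few lines. This is a Python illustration only, not the project's Unity C# code: the class names, field names, and event names such as `Stop_Music` and `Play_Tension` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class SceneAudio:
    """Hypothetical stand-in for the Scene Audio SO: a bundle of audio events."""
    music_event: Optional[str] = None
    ambience_event: Optional[str] = None
    stop_current_music: bool = False

@dataclass
class DialogueNode:
    """Hypothetical progression object that optionally carries scene audio."""
    text: str
    scene_audio: Optional[SceneAudio] = None

@dataclass
class MusicManager:
    """Records the events it would post to the audio engine."""
    posted: List[str] = field(default_factory=list)

    def apply(self, scene_audio: Optional[SceneAudio]) -> None:
        if scene_audio is None:
            return  # nodes without audio data leave playback untouched
        if scene_audio.stop_current_music:
            self.posted.append("Stop_Music")
        if scene_audio.music_event:
            self.posted.append(scene_audio.music_event)
        if scene_audio.ambience_event:
            self.posted.append(scene_audio.ambience_event)

def run_dialogue(nodes, manager):
    """Walk the nodes in order, handing each node's audio data to the manager."""
    for node in nodes:
        manager.apply(node.scene_audio)
```

The design choice this shows is that audio changes are no longer tied to one kind of object: any node in the progression can carry audio data, and nodes that carry none are simply skipped.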

Final Thoughts

A few other things I worked on towards the end of the project included fixing small audio bugs, improving the looping and transitions of our several dynamically evolving music tracks in Wwise, and improving the special audio transitions and effects for when the game is paused and upon finishing a battle.

The entire rest of the audio team was also really productive and hard working until the very end. We got so much done this semester and I'm really glad to have worked with such a talented and dedicated group for my last semester as the audio lead in WolverineSoft Studio. I'll be in the studio one more time next semester, before I graduate, as a member of the audio team and am looking forward to working with the rest who are able to return to the team!

Hours Breakdown

- Studio-wide Meeting: 2.5 hours

- Audio Implementation & Bug Fixing: 10 hours

- Voice Over Audio Editing and Processing: 3 hours

- Task Creation & Planning: 1 hour

- Total: 16.5 hours

Project Rage (Twin Blades' Vengeance) - Winter 2022

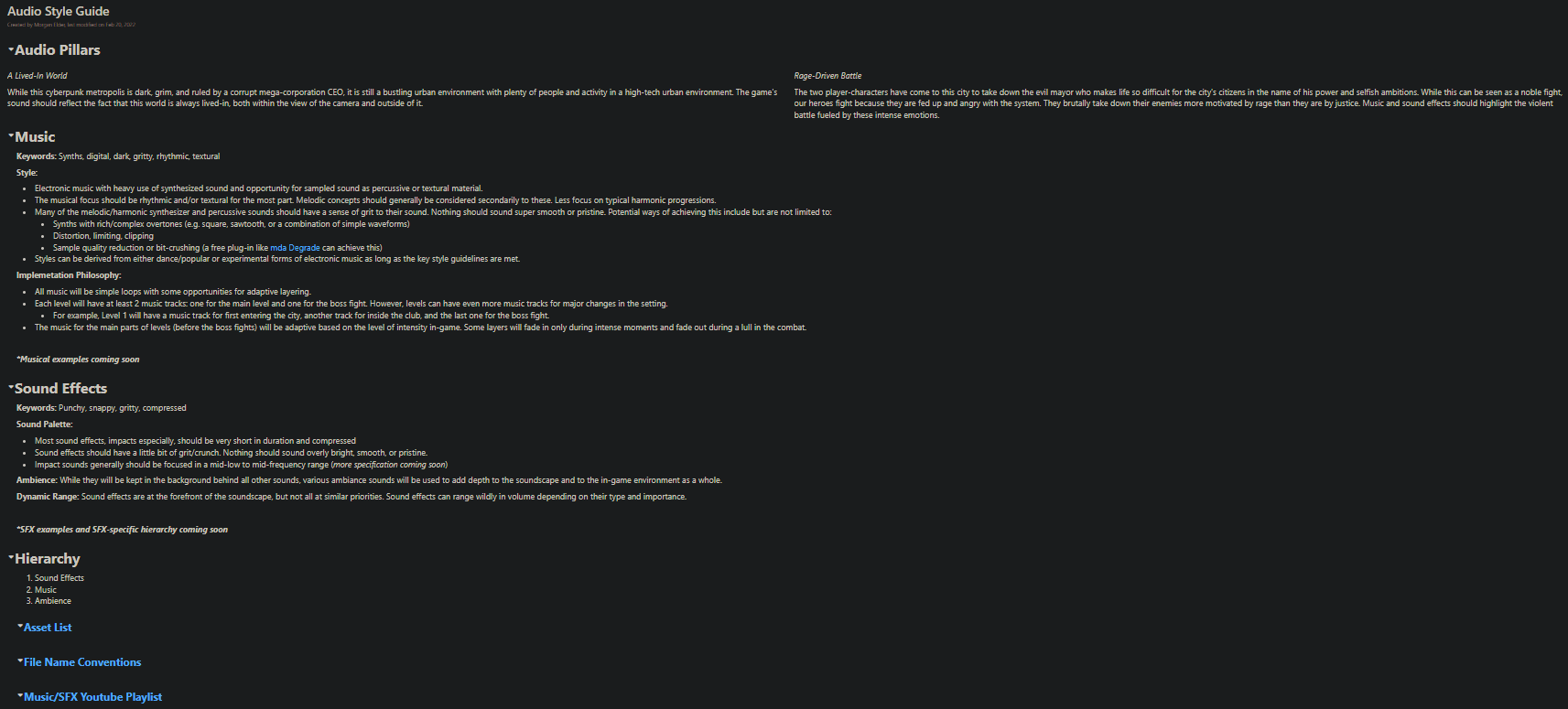

With temp sounds in the game and several references gathered by the audio team in pre-production, during this sprint I focused on beginning some of the important documentation the audio team will refer to and update throughout the project as well as having the audio team generate brief snippets of concept music.

Concept Music Snippets

For this sprint I gave each of the four audio team members (including myself) the task of creating 2 approximately 30-second music tracks based on the game’s themes and the game and movie references we had gathered the week prior.

While working on these two music concepts, I took my time to explore some synth instruments I hadn't used before and challenged myself by making my own custom synths with minimal use of preset settings. I used this low-stakes opportunity to try some new things: making a sequencer in the Serum plug-in, using sampled video game music as a sampler instrument, and trying some musical styles I'm not used to. I prioritized getting contrasting compositional ideas down, so I didn't worry about mixing very much. I kept the cyberpunk theme in mind while making these without worrying too much about the fine details of our game at this early stage.

Concept Music 1: Computer Club

Concept Music 2: Intermission in the City

Documentation and Research

The audio asset list and style document were two important pieces of documentation that I created this sprint. They both began with research which included watching and taking notes on a GDC presentation on how to design for sound in a game, reading articles on how to create a design document, watching tutorials on sound design techniques, and watching gameplay of several games as references. I also originally planned to complete some official Wwise tutorials to solidify and expand upon my understanding of audio implementation, but this had to be delayed until the next sprint due to a lack of time.

The audio asset list contains all sounds that I can imagine being in the game given the current design details and understanding of the game in this early stage. It will be a living document as more details are clarified and as I continue to make decisions on what sounds are needed.

Similarly, the audio style document will continue to be updated as I come to learn new details and make more decisions as the project goes on. As of now, it’s a good start with both broad and specific ideas for the game’s music and sound effects. However, I haven’t finalized the video references to other games that I would like to include in the document as benchmarks or comparisons to what I’m envisioning, so adding these will be my next step.

Hours Breakdown

- Music Snippets: 6 hours

- Research and Documentation: 6 hours

- Task Creation and Organization: 1.5 hours

- Meetings: 3 hours

- Total: 16.5 hours

Implementation

A lot of my time was put into implementation. The rest of the team made a lot of great assets, so I got to put in nearly all of the sound effects for the player, all of those for 2 of 3 enemy types, and the menu UI sounds, and I set up a few other sounds in Wwise and Unity that are ready to be put in the game the moment a few more systems are implemented by the other teams. With the team’s great work this sprint, I got to turn a lot of cells in the audio asset list from red to green!

I ran into a few challenges implementing that left me with less time to work on the SFX and music assets I planned to complete for this sprint. The UI sounds took the longest to implement since I had to add new components for each button object in the game but when I tried to merge my branch, there were a lot of merge conflicts. I started from the beginning and had to redo it two additional times until I figured out a way to implement that avoided the conflicts. This whole process was a lot more time-consuming than I expected, but I learned more about how to implement these unique kinds of sounds and will be more knowledgeable for future situations.

I also added in the background music for the first level with a temporary implementation that plays the intro section once and loops the rest of the piece. In one of my meetings, I talked a bit with the design team about setting up a system that would allow for dynamic music that would rise and fall in intensity along with the gameplay, so I’ve started planning for that final implementation.

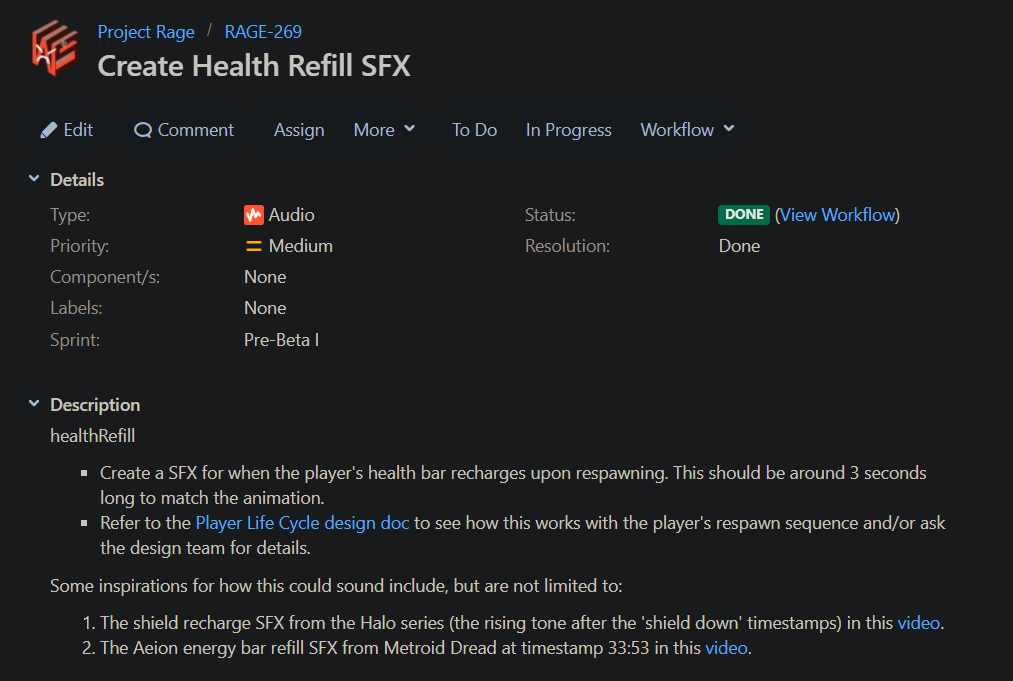

Task Creation and Finding References

As we had a lot of assets planned for this sprint, I began creating Jira tasks for each individual asset rather than creating a single task for each audio team member which included all of their assigned assets for the sprint. I also worked on finding a balance between simplicity and detail in each task description with a list of simple bullet points followed by an extra section with a few extra details or references as needed. This way, communication on the essential details was done immediately which allowed for the others to get started on their tasks quicker and easier. In the past, when I tried filling up task descriptions with longer and more convoluted descriptions, the extra details didn’t add much and had to be better clarified in later conversations. I still went back and forth with some of the audio members sharing ideas and clarifying details on tasks throughout the sprint and these felt more productive than before since these conversations were helpful for specifically sharing additional references and discussing finer details.

Creating SFX

I didn’t create too many assets this sprint, but I made a few variations of a knockdown SFX. There was no temp version of this sound before this, so adding it in created some additional feedback that was surprisingly satisfying and cool to hear!

Two Knockdown SFX Variations

Hours Breakdown

- Implementation: 10 hours

- Creating Sound Effects: 2 hours

- Task Creation and Finding References: 3 hours

- Wwise Tutorials: 3 hours

- Meetings: 3 hours

- Total: 21 hours

During the first week of this sprint, I had to focus on finishing up a time-consuming project outside of school and managing other responsibilities, so I was catching up on work for all my classes during the second week of the sprint. This meant that I didn't get to put significant time into studio tasks until towards the end of the sprint, and I realized too late that all of my tasks for the sprint would take more time than what I had remaining before the next sprint began. I'll be making up for this by trying my very best to get my most urgent tasks done at the very beginning of the next sprint.

While most of my work went into the general implementation of the rest of the team's new audio assets, I also got to optimize the organization of Wwise soundbanks, fix some audio bugs, and get a good start on my music tasks.

Implementation and Bug Fixing

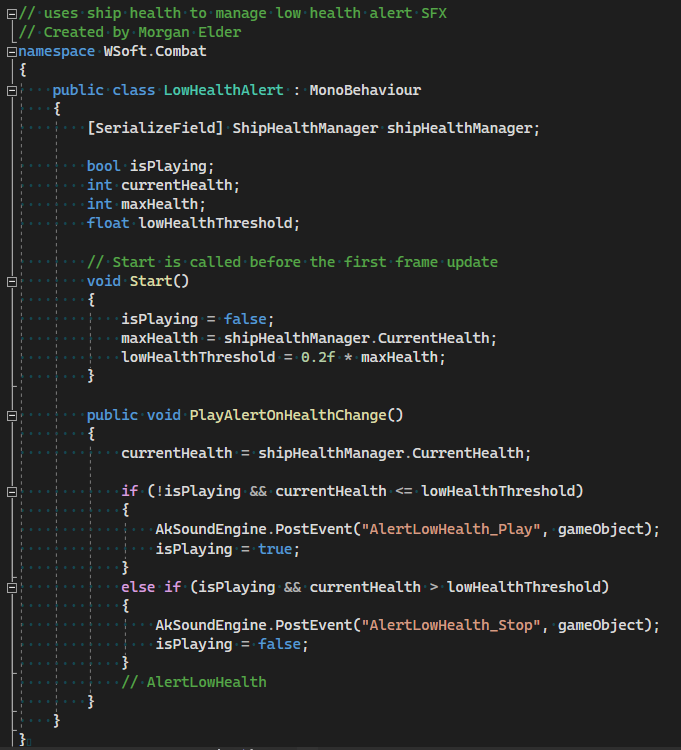

Besides adding in a lot of great audio assets completed by the rest of the members, I worked on fixing a few audio bugs like how the player's attack and dash sound effects would trigger both on button press and release rather than just on button press. This involved editing scripts to add exposed elements in the inspector for me to add audio events in the right place in a way that was new for me, but I managed to successfully update the scripts, add in the sounds, and fix the issue.
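The difference between triggering on press only and triggering on both press and release comes down to reacting to a single edge of the button state. The devlog does not describe how the scripts detected input, so the following Python sketch is only an illustration of the idea; the class name and the `Play_Attack` event name are hypothetical.

```python
class PressOnlyTrigger:
    """Fires a callback on the up-to-down edge of a button, never on release."""

    def __init__(self, on_press):
        self._on_press = on_press
        self._was_down = False

    def update(self, is_down):
        """Call once per frame with the current button state."""
        if is_down and not self._was_down:
            self._on_press()  # button went from released to pressed
        self._was_down = is_down


# Usage: hold for two frames, release, then tap once more.
played = []
trigger = PressOnlyTrigger(lambda: played.append("Play_Attack"))
for state in [False, True, True, False, True, False]:
    trigger.update(state)
```

In this run the sound is requested twice, once per press, and neither the held frame nor the releases add an extra trigger.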

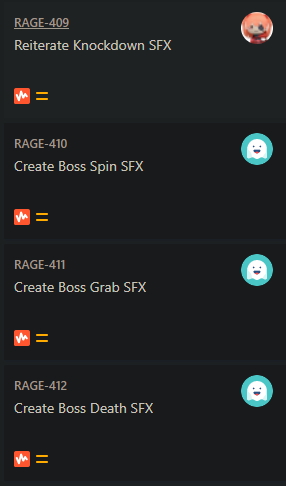

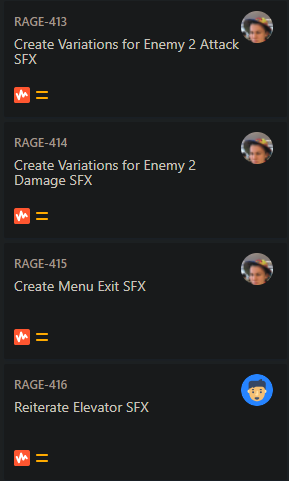

Task Creation

I just assigned the very last of the game's must-have sound effects for myself and the other audio members to create over the next week-long sprint, along with some tasks for reiteration on sound effects and adding additional variations to preexisting sounds. It's satisfying to know that we're at a pretty good spot based on our timeline and can expect to be able to focus all of our energy on reiteration and perfecting the game's sound for our final Pre-Gold sprint.

Hours Breakdown

- Implementation: 5 hours

- Creating Music: 4 hours

- Task Creation: 1 hour

- Meetings: 3 hours

- Total: 13 hours

For the past two weeks, I put in a lot of time for both creating assets and implementation to make up for the moment in time a few weeks ago where I was busy outside of the studio. I got a lot done!

Music and SFX

I created a new arcade-inspired jump SFX and two music tracks: one for the main menu and one for the Level 2 city area.

Jump SFX

Main Menu Theme

Level 2 Theme

I tried making the Level 2 theme reflect that this area was supposed to be wealthier and more refined than Level 1, which had more energetic music, by using lush synth chords. But it includes a second section with more active percussion and a sequenced synth line that's used for the 'high-intensity' moments in the game. I unfortunately didn't get a chance to mix these tracks to perfection, but I made up for this by focusing on adaptive music which is a feature that I believe makes video game audio especially unique and impactful.

Ambience

Other members of the audio team created three sets of ambiences for me to use for Level 1, the Level 2 city area, and the Level 2 boss fight in the office area. Each ambience was composed of 2-4 individual tracks to create a rich soundscape of general urban ambience, flying cars, machinery, howling wind, and more. In order for these ambiences to sound even more convincing, I granulized each track, or, in other words, I split each of them up into several sections to play back in randomized sequences. Additionally, for the tracks that include more discrete sounds (e.g. car honks), I applied randomization to the volume, pitch, and low/high cut filters to make each instance unique.
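The two randomization ideas above (shuffled playback of track sections, and per-instance variation for discrete sounds) can be sketched as follows. On the project this would have been configured in the audio middleware rather than written by hand, so this Python is purely illustrative; the function names and the volume and pitch ranges are assumptions.

```python
import random

def split_into_segments(samples, segment_count):
    """Split a track into consecutive segments of about len/segment_count samples."""
    size = max(1, len(samples) // segment_count)
    return [samples[i:i + size] for i in range(0, len(samples), size)]

def shuffled_playlist(segments, rng, repeats=1):
    """Return segment indices in random order, avoiding immediate repeats."""
    order = []
    for _ in range(repeats):
        indices = list(range(len(segments)))
        rng.shuffle(indices)
        # If this pass would start with the segment that just played, rotate it.
        if order and len(indices) > 1 and indices[0] == order[-1]:
            indices.append(indices.pop(0))
        order.extend(indices)
    return order

def randomized_one_shot(rng, volume_db=(-6.0, 0.0), pitch_cents=(-200, 200)):
    """Pick per-instance volume and pitch offsets for a discrete sound."""
    return {
        "volume_db": rng.uniform(*volume_db),
        "pitch_cents": rng.uniform(*pitch_cents),
    }
```

Shuffling whole passes rather than picking a random segment each time guarantees every section is heard before any repeats, which helps hide the loop.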

Implementation

My biggest commitment in the past week was fine tuning implementation and adding some cool new systems, like the adaptive music. From the beginning, I had hoped to have adaptive music in the game and I managed to realize this goal exactly how I envisioned it. I set triggers throughout the levels to change a binary 'Intensity' state between low and high states.

I composed the Level 2 theme to work well with the vertical layering technique so that switching between low and high states is as easy as switching out a layer for another or muting/unmuting other layers in the mix. For example, there is a second version of the drums track that adds delay and other effects to sound fuller and there's also a glitchy synth line that comes in only during the high-intensity parts.
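Vertical layering amounts to a lookup from the intensity state to a set of layer volumes. The Python sketch below shows that mapping plus a simple linear crossfade between states; the layer names are hypothetical, and in the actual project this behavior was set up in Wwise rather than in code like this.

```python
# Target volume (0.0 to 1.0) of each layer for each intensity state.
# Layer names are illustrative; all layers stay in sync and only volumes change.
LAYER_MIX = {
    "low":  {"pads": 1.0, "drums_dry": 1.0, "drums_fx": 0.0, "glitch_synth": 0.0},
    "high": {"pads": 1.0, "drums_dry": 0.0, "drums_fx": 1.0, "glitch_synth": 1.0},
}

def layer_volumes(intensity):
    """Return a copy of the layer volumes for the given intensity state."""
    return dict(LAYER_MIX[intensity])

def crossfade(from_state, to_state, progress):
    """Linearly blend layer volumes; progress runs from 0.0 to 1.0."""
    p = max(0.0, min(1.0, progress))
    a, b = LAYER_MIX[from_state], LAYER_MIX[to_state]
    return {name: a[name] + (b[name] - a[name]) * p for name in a}
```

Halfway through a low-to-high crossfade, the dry drums sit at half volume while the effected drums and the synth line fade in, and the shared pad layer is unaffected.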

The Level 1 music was a lot more difficult to implement since it was composed of two sections (plus a transition segment) which had too many contrasts to smoothly switch between via layering. To combat this, I used a horizontal re-sequencing method where these two main sections and the transition section were sequenced together in real time depending on the in-game intensity level.

In other words, the first section would loop on its own as long as the intensity state is set to low. The moment the player enters a high-intensity level, the transition section begins to play on the next measure and this moves into the second main section which loops on as long as the high intensity level is maintained. Once another low-intensity state is triggered, the music waits until the next measure to switch back to the first, low-intensity section.
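The sequencing rules described here form a small state machine that only changes section on measure boundaries. The following Python sketch models it under two assumptions the devlog does not state: the transition segment is treated as one measure long, and if the intensity drops back to low during the transition, the music returns to the low loop. Section names are hypothetical.

```python
class HorizontalMusic:
    """Chooses which music section plays next, changing only at measure boundaries."""

    def __init__(self):
        self.section = "low_loop"
        self.intensity = "low"

    def set_intensity(self, intensity):
        """Gameplay triggers call this at any time; nothing changes mid-measure."""
        self.intensity = intensity

    def on_measure_boundary(self):
        """Called at the start of each measure to pick the section to play."""
        if self.section == "low_loop" and self.intensity == "high":
            self.section = "transition"
        elif self.section == "transition":
            # Assumption: the transition lasts exactly one measure.
            self.section = "high_loop" if self.intensity == "high" else "low_loop"
        elif self.section == "high_loop" and self.intensity == "low":
            self.section = "low_loop"
        return self.section
```

Deferring every change to `on_measure_boundary` is what keeps the switch musical: a trigger can arrive at any moment, but the listener only ever hears the change land on a bar line.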

I set up the high-intensity moments to happen during boss fights and moments where the camera is locked in place for the player to fight several waves of enemies before continuing. It's really fun and satisfying to hear how the music's energy rises and falls with the gameplay.

I also implemented one more binary audio state which I called 'Ambience Zones'. In these areas, the music drops out to nearly nothing and the background ambience is turned up. This was made specifically for one key moment in Level 2 where the player crosses a bridge and is given a chance to appreciate a clear view of the background scenery without any enemy encounters. During this moment, in order to clarify to the player that this is a brief area of respite for them to appreciate the sights and sounds, I enabled the ambience zone so that diegetic ambient sound would become the focus until the next enemy encounter.

Hours Breakdown

- Implementation: 12 hours

- Creating Music: 9 hours

- Task Creation: 1 hour

- Playtest: 1 hour

- Meetings: 3 hours

- Total: 26 hours

Project Tower (Ragnarok TD) - Fall 2021

Playing Bloons Tower Defense 6

To start this sprint and to prepare for my first deliverables, I finally sat down to play Bloons Tower Defense 6 for the first time. Not only was it important that I become more familiar with the game that Project Tower is based on, but I specifically wanted to take a look at the approach to audio in BTD6. I assessed what I think does and doesn't work in the game's audio as well as which aspects I'd want to adapt to Project Tower.

I noted that the music overall is very cheery and energetic. The main menu music is pleasant and upbeat which fits with the bright, colorful visuals and laid-back feel. Once I entered the first level, the music had a very similar energy to it.

While the music is pleasant, inoffensive, and appropriate for the game's theme, I felt that it was a bit stagnant and maybe too consistent in all aspects. The meter, mode, instruments, and overall musical style were all largely unchanging between tracks. It was all a bit too samey for my liking, especially for a soundtrack that demands some level of attention from the player with how high-energy it is.

This music struck me as the kind that a player would have no problem turning off if they were to play for long periods of time since it's so repetitive and unchanging. To avoid making easily mutable or uninteresting music for Project Tower, I want to focus on making appropriately long music loops, varied music tracks, and a polished sound overall. I also would love the chance to implement an adaptive soundtrack that would rise and fall in tension in accordance with player progress or level difficulty, but I understand that this may be a stretch goal and I'll have to further discuss it with the rest of the audio team.

The sound effects for placing units and popping balloons were appropriately simple and did their job well and I hope to replicate their style for Project Tower. However, since the balloon-popping SFX is so constant, I want to be sure that whichever sound Project Tower uses for defeating enemies never gets tiring to hear by adding variation.

There are voice-over sound effects for the powerful Hero monkey units in BTD6. While this has inspired me to consider voice-overs for narrative cutscenes or as feedback sounds during gameplay, I really disliked the voice-over used in BTD6. It's a little difficult for me to put my finger on why, but something about the voices and lines given to these monkey characters struck me as campy and inconsistent with the rest of the game’s theme. I'll have a better idea of what not to do if voice-overs are ever added to Project Tower.

Meeting with the Audio Team

I've begun having weekly Tuesday meetings on Discord with the two others working on audio, Emily and Crystal. In these meetings, we discuss the creative direction and ideas we have for the game’s audio, divide the audio-related issues of the given sprint amongst each other, set short-term deliverable and learning goals for ourselves, plan for upcoming tasks, provide feedback on each other’s work, and more. It’s been a really helpful opportunity to share and develop new ideas, have a smaller team with which I can discuss audio-related issues and goals, learn from the skills of others, and feel organized and motivated as an audio team.

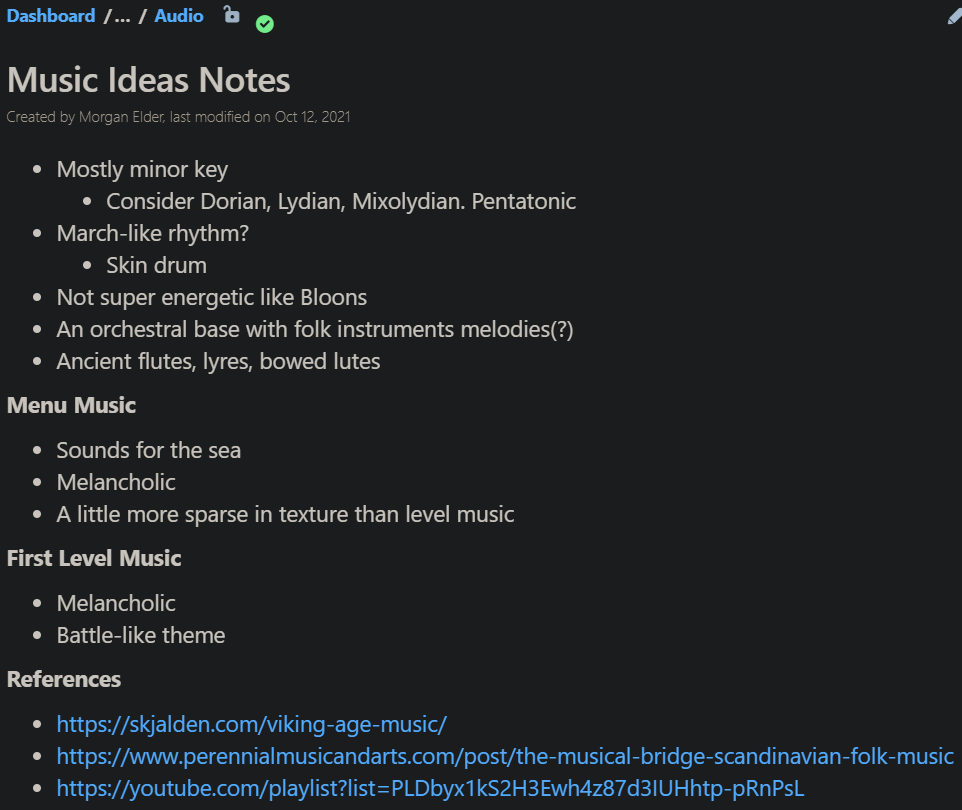

As I was tasked with creating the first draft of the game’s main menu music and Emily was responsible for the first level music, we met an additional time between our two Tuesday audio team meetings for this Pre-Alpha I sprint. We wanted to collaborate on identifying the beginnings of Project Tower’s musical identity. Since the game’s theme is that of Old Norse mythology, we researched and discussed which instruments, musical modes, and styles to use in order to reinforce the game’s theming. As the tone of the project was recently refined by the team working on the game’s narrative, we were also able to discuss how to refine our own musical devices and tone. We created a page in Confluence to record our ideas to refer to later on. This also serves as a living document as we find more resources and come up with more focused ideas.

Additionally, we started a YouTube playlist full of relevant reference music, video game soundtracks, traditional Scandinavian music, and more, to find inspiration for our own music.

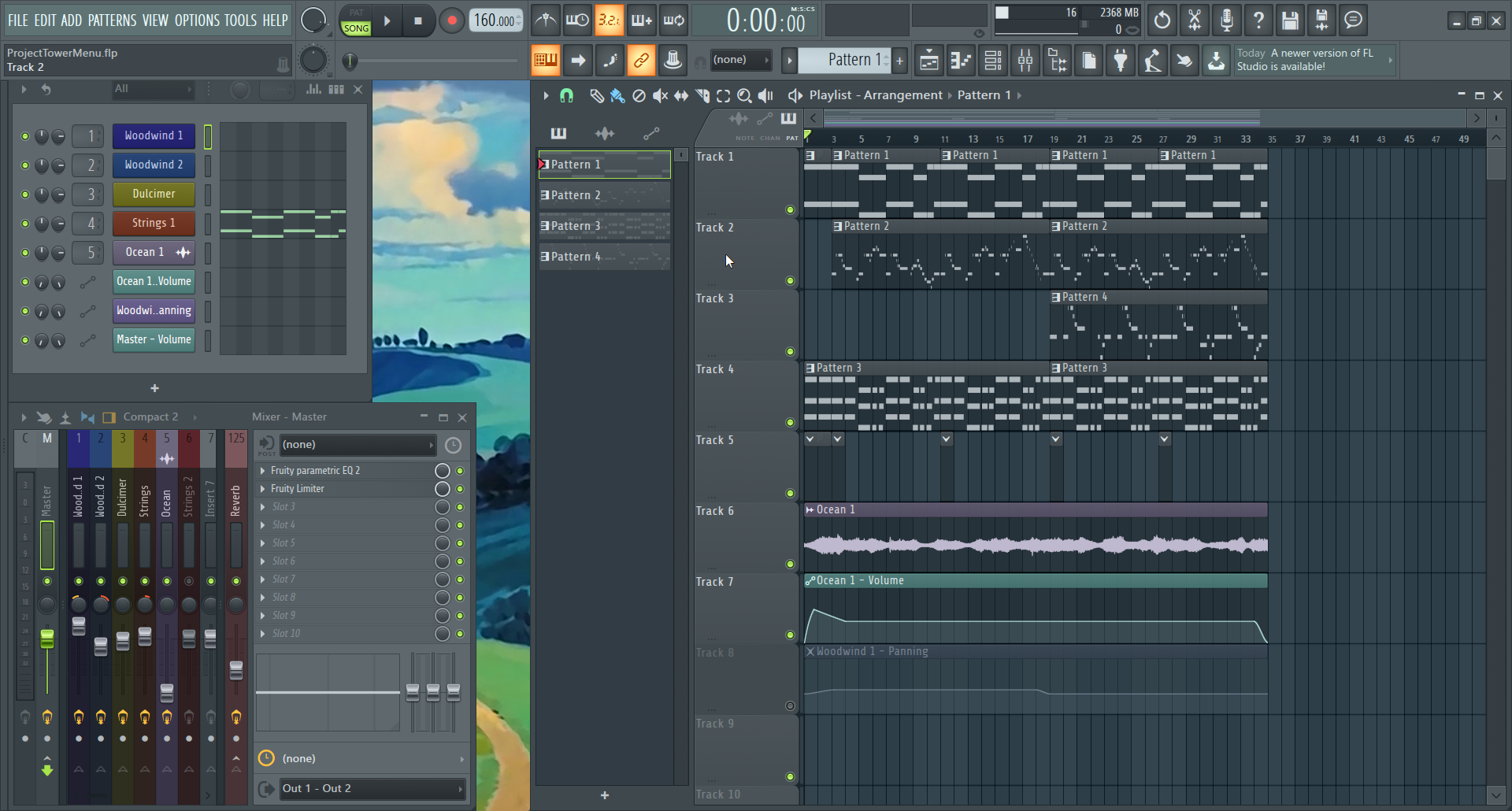

Composing the Main Menu Theme

Composing the first draft for the main menu music began with several challenges and inconveniences, but turned out well in the end! The process began with me experimenting with various audio tools and plug-ins to see what kind of interesting sounds I’d be able to use for the project. I wasn’t yet familiar with these newer tools, so I then switched to using tools I was more familiar with and ended up with a product that I was satisfied with.

I referred to the rough notes on the musical devices to use and tone to go for, listened to Emily’s draft of the game’s first level theme, and listened to various reference music (mostly examples of Scandinavian folk and medieval music). These greatly helped inform the direction of my own work and made sure that my menu theme would coincide thematically with the level music as well as with the rest of the game.

With the notes and music references, I sought out instruments that resemble those used in old Scandinavia while using low strings as a drone that grounds the piece. I found the instruments I wanted but wanted to make these synthesized instruments sound more convincing and expressive. However, the particular device I needed for this wasn’t working as expected, so I ended up spending a long time troubleshooting without being able to resolve all of the issues. I ultimately had to abandon the device and do the best I could with more traditional and limiting methods for the time being.

Main Menu Theme Draft

In the end, I think the main menu music draft I’ve produced sounds like a solid start with a melancholic tone, traditional-sounding instruments, and sound samples of the sea all to reinforce the game’s tone and help create a musical identity within the game’s soundtrack.

Hours Breakdown

- Playing BTD6 for Reference: 1 hour

- Audio Team Meetings: 3 hours

- Composing music and troubleshooting DAWs: 7 hours

- Experimenting with creating SFX and instruments: 2 hours

- Total: 13 hours

Overall, it was challenging for me to complete all of the tasks I originally set out to do due to some unexpected obstacles in my progress and several time-consuming priorities in my life that unfortunately coincided with this sprint. Despite the challenges, I was able to create some sound effects and ambiances for the game, I made some strides in learning audio implementation, and the audio team as a whole made good progress in terms of plans for our next tasks. With a new sprint starting and a more typical schedule, I’ll be working hard to plan ahead and complete all my tasks to make up for the ones I missed this sprint.

Audio Team Meetings

During this sprint, the audio team had two of our weekly meetings and I had one more with Crystal to discuss audio implementation. Beyond the typical discussion of choosing tasks and talking about our progress, something we focused on was creating a more unified sound for the game by finding free audio plug-in software instruments that all of us could use across our different DAWs. We took into consideration the sounds and instruments used in our music drafts until this point as we decided on new accessible, cross-platform instruments to use. We narrowed down all of the main instruments we would need to 1-3 software instruments each and this will be refined as we continue to experiment with these.

I’ve been trying to learn more about the audio implementation side of things, so I had an additional meeting with Crystal, who taught me the basics of making and managing new branches of the project, using Wwise, and integrating the Wwise project into the game’s Unity project. I learned a lot and was able to implement a temporary sound effect into the game during this meeting, so I’ll continue practicing by completing Wwise tutorials and I’ll soon be helping Crystal with implementing the sounds and music we create.

Recording Ambiances and Creating SFX

I was tasked with creating some ambiance audio for the game, so I planned to go out to capture some field recordings to have more of my own material to work with. It’s not fully decided how the ambiance tracks may be used in the game, but the audio team has considered using ambiances in place of music at key moments in the narrative scenes where it may be appropriate, or as a low-volume background noise along with music during the levels as they change in scenery. One of the sounds I was most looking forward to recording was that of insects and other animals at night. However, the day I had the chance to go out at night to record, the temperature had just dropped dramatically and all of the vibrant insect sounds I heard the day prior had fallen silent as the insects died or went dormant for the winter. I also wanted to record the sound of wind rustling the leaves of trees and bushes as the winds in the area picked up, since that could be a cool sound to have for recreating a virtual environment in-game. Once I listened back to these recordings, I realized that they were unfortunately unusable. The small portable recorder I have doesn’t have a windshield, so all of the recorded wind noises are really distorted.

Because of these obstacles, I ended up using mostly old nature recordings of mine, which were a little limited but worked well for what I had. So far, the only ambiance track ready for implementation is the one for crickets.

Crickets Ambiance Draft

I’ll record more soon, rely on editing preexisting free-to-use sounds from online, and consider synthesizing my own ambient tracks for the future.

I also worked on creating some sound effects by using free-to-use sounds from online as a starting point and heavily editing them. I’m familiar with sound design for music, so transferring the skills and knowledge of audio effects to transform sounds into the sound effects that the game needs has been a fun challenge. Here’s the first draft for the sound effect of a bulk enemy ship breaking down.

Bulk Enemy Breaking SFX Draft

Hours Breakdown

- Playing BTD6 for Reference: 2 hours

- Audio Team Meetings: 4 hours

- Recording Environmental Audio Samples: 2 hours

- Editing Recordings and Creating SFX: 4 hours

- Total: 12 hours

Audio Team Meetings

In the audio team’s last meeting, we updated our list of essential tasks for the rest of the pre-beta period. To finalize our list, we played some of the sandbox mode in BTD6 together on the Discord call, which led to a lot of interesting conversations on which sounds we should include or omit from our own game.

During this conversation, I brought up some of the stretch goals we had mentioned at the beginning of this semester so that we could decide on which of these final goals we wanted to commit to. Since these stretch goals would be time-consuming tasks, planning for these would have to start early. The two main considerations were dynamic music and voice acting. Months ago, we briefly talked about the idea of having music that would dynamically change in accordance with the intensity of the game on-screen. It would be constructed by musical layers that would build up or thin out over time. However, this would’ve added a big task for the programming team to create triggers for these musical moments based on some kind of in-game statistic. Also, the audio team would’ve had to make a lot of changes to the music we already had in the game.
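To give a sense of what the layered idea above could look like, here is a minimal sketch and not something we built. It assumes the game exposes a single hypothetical `intensity` value between 0 and 1 (for example, derived from how many enemies are on screen) and that the music is split into a base layer plus extra layers that fade in one after another. The function name and the even split of the intensity range are illustrative assumptions.

```python
def layer_volumes(intensity, num_layers=4):
    """Return one volume (0.0 to 1.0) per music layer.

    `intensity` is a hypothetical in-game statistic normalized to [0, 1].
    The base layer always plays; each extra layer fades in across its own
    equal slice of the intensity range.
    """
    # Clamp so out-of-range values from the game cannot break the mix.
    intensity = max(0.0, min(1.0, intensity))
    extra_layers = num_layers - 1
    volumes = [1.0]  # base layer is always audible
    for i in range(extra_layers):
        # Layer i starts fading in once intensity passes i / extra_layers.
        fade = intensity * extra_layers - i
        volumes.append(max(0.0, min(1.0, fade)))
    return volumes


if __name__ == "__main__":
    for level in (0.0, 0.5, 1.0):
        print(level, layer_volumes(level))
```

Even in this simple form, something in the game code has to compute the intensity value and update the layers every so often, which is the extra programming work mentioned above.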

Given those costs, we instead began discussing a plan to implement voice acting for the 2 main visual novel narrative scenes in the game. This wouldn’t add too much additional work for the programming team and it would do a lot for the game by making the narrative scenes more engaging and expressive. We have yet to figure out all of the specifics, but we’ll find 2 people, either from within the studio or externally, to act as the two voiced characters. I have access to and certification at the audio studios at the Duderstadt Center, so we’ll book one of those and should be able to record all of the lines in one session. I’ll be doing the recording and editing of the dialog lines, and I’m really excited about this new feature!

Finding New Strategies for Creating SFX

Most of my time working on tasks this sprint was spent recording and creating sound effects. While I have a bit of practice with sound design for music, designing sounds to match the way I heard them in my head was more challenging than I originally expected. At first, I tried really hard to make new sounds from scratch by using simple oscillators and effects, but I’m not experienced enough with digital sound synthesis to have figured it out in a reasonable time. After talking to the rest of the audio team about my challenges and listening to their processes, I decided to simplify my own process. I began using the preexisting free-to-use sounds that we had in our game as temporary assets, my library of free sound samples, and my own recordings as a basis. I could then more easily edit, process, and layer them to fit the sound I was going for. This way, I made variations of the enemy death sound by taking the preexisting in-game sound and layering my processed vocal recordings on top of it. Similarly, the UI sounds for when the mouse hovers over and then clicks a button were edited sounds of castanets, percussion instrument samples I had saved from years ago.

Enemy Death SFX Variations

UI Click Button SFX

UI Hover Button SFX

Overall, despite being busy at the beginning of the sprint, I’m proud of the progress I made by the end of it. Even though composing music went a bit slower than it normally does, I still made some progress on music that I’ll be able to put into the game soon, and I made several sound effects using new strategies that made the work easier and more creatively engaging for me.

Hours Breakdown

- Audio Team Meetings: 3 hours

- Recording and Creating SFX: 6 hours

- Composing Music: 3 hours

- Total: 12 hours

Audio Team Meetings

The audio team had an especially productive and long meeting on November 16th where we listened to all of the audio we had in the game so far and finalized a list of sounds that needed to be iterated on and sounds that had yet to be created. At this point, only a few more sounds had to be added, which was a great feeling! It was a nice experience to go through all of the sounds we had created in order to look at our progress and talk about how things could be further improved, mostly by means of mixing techniques. During the meeting, we were able to walk through editing several of these sound effects together by screen-sharing. We got to share different mixing strategies with one another, which was insightful.

We also did some planning for the voice acting recording. Since we had to record within the following week, which would’ve made casting difficult, it was fortunate that Emily and Crystal were willing and eager to be the voice actors. Crystal had her own mic, but Emily didn’t and I didn’t have anything to let her borrow. I had access to rent mics through the music school and the Performing Arts Technology department I’m a part of, but if I was going to go out of my way to reserve something, I wanted to make sure we got the best recordings possible. In a later meeting on November 24th, separate from our weekly Tuesday meeting on the 23rd, we listened through all of the dialogue.

Recording Dialogue

I reserved Audio Studio C at the Duderstadt Center for 3 hours so that Emily and I would have plenty of time to practice and record her lines and so that I could troubleshoot any potential challenges in the studio. We started by looking at the script that Crystal had sent to us, talking about how different lines might sound, and listening to some relevant examples of character voice acting in video games. It was still undecided which voices Crystal and Emily would do for the game, so Emily recorded voices for the two voiced characters since we had the time for it.

We cleared out the nearby isolation booth for us to record her lines in a quieter and more acoustically treated space compared to the studio’s main control room. I was able to set up the studio so that her microphone was routed from the iso booth to the control room where I was, and she was able to hear her voice routed back into her headphones. I set up the headphone mix mostly in case I wanted to process her voice during recording and would want her to be able to hear how she sounded with the effect in real time, but I ended up not using any effects during the recording session. However, sending her a headphone mix did help so that we could listen back to her previous takes together without her having to go back and forth between rooms after each take. I stayed in the control room to record all of her takes. I didn’t think there was any talkback system in Audio Studio C for me to send my voice to Emily when we needed to talk in between takes, so we simply called each other over the phone to communicate while we were in different rooms.

The setup for recording took me a little longer than expected since I hadn’t worked in the Duderstadt studios for a while and Audio Studio C had a few notable changes since I had last been in there, but, in the end, things worked as we had hoped and the recordings came out amazing!

We recorded on a Shure KSM44A large diaphragm condenser microphone and an Aviom A-16II was used to send Emily the headphone mix.

Selecting and Editing Dialogue

Once Emily and I had recorded her lines for the two potential characters she was voicing, Crystal recorded her own takes for these two characters plus lines for the narrator. After listening to all of their lines, we decided that we would use Emily’s voice for the two characters and Crystal’s for the narration. Emily had an impressive range in her character voices and Crystal’s delivery of the narration was perfect for telling the story.

With this decided, I listened through all of the takes on my own and selected the best delivery for each line in the dialogue. After cutting and naming these selected takes, I applied a series of noise reduction, EQ-ing, loudness matching, compression, gating, and amplification to all of these audio files so that they sound clear and consistent, especially since Emily and Crystal’s lines were recorded in completely different locations and with different mics.

Dialogue Sample - Emily as Revna
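As a rough illustration of the loudness matching step mentioned above, here is a minimal sketch. It is not the tool or process I actually used in my DAW: it assumes audio is represented as a plain list of float samples between -1.0 and 1.0, and it uses RMS level as a simple stand-in for perceived loudness. The function names and the target value are hypothetical.

```python
import math


def rms(samples):
    """Root-mean-square level of a list of float samples."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def match_loudness(samples, target_rms):
    """Scale samples so their RMS level lands at target_rms.

    Silent input is returned unchanged, and output is clamped to
    [-1.0, 1.0] so the applied gain cannot push samples out of range.
    """
    current = rms(samples)
    if current == 0.0:
        return list(samples)
    gain = target_rms / current
    return [max(-1.0, min(1.0, s * gain)) for s in samples]


if __name__ == "__main__":
    quiet_take = [0.1, -0.1, 0.1, -0.1]
    loud_take = [0.4, -0.4, 0.4, -0.4]
    target = 0.2  # hypothetical shared level for every dialogue file
    for take in (quiet_take, loud_take):
        print(round(rms(match_loudness(take, target)), 3))
```

Bringing every take to one shared level like this is what lets lines recorded in different rooms and on different mics sit next to each other without jumping in volume.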

Narrative 1 BGM Rough Draft

During this sprint, I also iterated on several sound effects and created a rough draft BGM track for the first visual novel-styled narrative scene.

I’m really happy with how the voice acting has turned out and with my great progress this sprint!

Hours Breakdown

- Audio Team Meetings: 4 hours

- Creating SFX: 2 hours

- Recording Dialogue: 3 hours

- Editing Dialogue: 3 hours

- Composing Music: 3 hours

- Total: 15 hours

Audio Team Meetings

The audio meetings during these Gold sprints focused mostly on mixing and polishing what we already had in the game along with adding in the very last pieces. Our first meeting of the Gold I sprint was spent going through the game together and discussing which sounds only needed their volume rebalanced and which ones should be edited and remixed. For example, I ended up re-exporting some music tracks so that they would loop better in-game. We were able to have a game audio professional, Jeffery “Pumodi” Brice, playtest the game for some more audio-specific feedback. I was unable to attend the meeting, but Crystal was able to and took great notes of Pumodi’s comments. From this playtest, we were able to make a list of all of the things we could improve on and prioritize them. One of the main criticisms was that the main enemy death sound effects sounded too much like indiscernible digital noise when repeated in quick succession while the game was played at 3 times speed.

Creating and Iterating on SFX

The addition of another special type of enemy, the ceramic type, called for additional enemy sound effects. I used several samples of glass and ceramic breaking sounds to make the damage and break sound effects for these enemies which, upon breaking, release additional enemies.

Ceramic Damage and Break SFXs

I also had to iterate on the variations of the enemy death sound effects to make them a bit less uniform. These sounds are made up of a layer of a “whoosh” sound recorded from a stick swinging in the air and another layer of a processed vocal sample of mine, a unique one for each variation. The whoosh layer was the main issue since it was too consistent, so I made it a bit softer and applied more effects to introduce variation. Crystal also incorporated some subtle pitch variation when she implemented these new sounds into the game.

Updated Enemy Death SFX Variations
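For anyone curious how subtle pitch variation can work in principle, here is a minimal sketch. It is not Crystal’s actual implementation: it assumes each time a sound is triggered, a random offset of up to a hypothetical `max_semitones` is picked and converted into a playback-rate multiplier, where 1.0 means the original pitch.

```python
import random


def random_pitch_ratio(max_semitones=1.0, rng=random):
    """Pick a playback-rate multiplier for one triggered sound.

    A random offset within +/- max_semitones is converted to a rate
    multiplier using the equal-temperament relation 2 ** (semitones / 12).
    """
    semitones = rng.uniform(-max_semitones, max_semitones)
    return 2.0 ** (semitones / 12.0)


if __name__ == "__main__":
    # Each repeated enemy death would get a slightly different pitch.
    for _ in range(5):
        print(round(random_pitch_ratio(max_semitones=0.5), 4))
```

Keeping the range to a fraction of a semitone means the sound stays recognizable while rapid repeats stop sounding identical.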

I also added an ocean ambiance sound effect to play softly in the background of gameplay.

Ocean Ambience

Editing Music

I made a new version of the main menu theme with improved mixing and I switched out the main melody instrument to something that is a little easier on the ears.

Updated Menu Music

I also finalized the music for the first narrative scene.

Updated Narrative 1 Music

As I wrap up my first semester with the studio, I’m really glad I got to have this experience! It was really fun working with the rest of the studio and I learned a lot in terms of game audio. I’m proud of our work and the final product!

Hours Breakdown

- Audio Team Meetings: 3 hours

- Creating/Editing SFX: 5 hours

- Editing Music: 4 hours

- Total: 12 hours